Last Updated: December 18, 2021

Multiple Object Tracking (MOT) involves tracing the motion of one or more objects across the frames of a video stream. Each object is given a unique identifier (ID) that stays associated with it as it moves around. Unlike plain object detection, building an effective tracker also requires predicting an object's future motion and re-identifying it across frames.

Current MOT techniques can be grouped into two classes: Detection-Based Tracking (DBT) and Detection-Free Tracking (DFT).

Detection-Based Tracking techniques use an object detector to find the objects in the scene and then create trackers for them. Detection-Free Tracking, in contrast, requires the objects to be initialised manually; it then tracks them throughout the video.

DFT techniques have niche use cases because they are limited in real-world applications, e.g. they cannot deal with newly appearing or disappearing objects.

MOT tasks are solved in either an online or an offline manner.

In an online solution, the tracker processes frames sequentially and has access to past frames but not to future ones. This makes online tracking suitable for real-time MOT.

In an offline solution, the tracker has access to all the frames of the video, so the order in which they are processed does not matter.

Detection, Motion Prediction and Re-Identification in Multiple Object Tracking

These are the three key steps in building a multiple object tracker. This article focuses on tracking moving objects with a moving camera. The scenario of a stationary camera with moving objects is not covered here, although some of the techniques mentioned apply to both problem domains.

Detecting Objects of Interest

Deep learning methods are the state of the art, outperforming traditional detectors such as Histogram of Oriented Gradients (HOG) or cascade detectors. Commonly used deep learning models include YOLO (see the tutorial on YOLOv1 for background), Faster R-CNN and EfficientDet.

Predicting Object Motion

This involves predicting where the object will be in the next frame. A naive assumption that works in many cases is that the object's next location is close to its previous one (similar to the small-motion assumption used when calculating optical flow). This works well for video streams with a high frame rate and for objects whose motion follows a regular pattern.
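The naive "next location is close to the previous one" assumption can be sketched as a nearest-neighbour lookup. This is only an illustration: the function name `nearest_detection`, the use of box centres as `(x, y)` points and the `max_distance` gate are assumptions made for this example, not part of any particular tracker.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def nearest_detection(
    last_position: Point, detections: List[Point], max_distance: float
) -> Optional[int]:
    """Return the index of the detection closest to the object's last position.

    Returns None when no detection lies within max_distance, which a tracker
    could interpret as "object not seen in this frame".
    """
    best_index: Optional[int] = None
    best_distance = max_distance
    for index, (x, y) in enumerate(detections):
        distance = math.hypot(x - last_position[0], y - last_position[1])
        if distance <= best_distance:
            best_index, best_distance = index, distance
    return best_index


# The object was last seen at (10, 10); three detections arrive in the next frame.
match = nearest_detection((10, 10), [(50, 50), (12, 11), (11, 30)], max_distance=20)
```

The distance gate matters at low frame rates or with fast objects: without it, the closest detection is always accepted, even when it clearly belongs to a different object.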

A Kalman filter is an example of a traditional algorithm that can predict the motion of objects according to an assumed motion model, while remaining robust to noisy observations. Deep learning models that work well with sequential data, such as recurrent neural networks (LSTMs, BiLSTMs etc.) and attention networks, have also proven to be highly effective at predicting object motion.
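As a rough illustration of the Kalman filter idea, the sketch below tracks a single coordinate (for example the x centre of a bounding box) under a constant-velocity model. The class name `KalmanFilter1D`, the initial covariance and the noise values are all assumptions chosen for the example; a real tracker would typically filter the full box state and tune these values.

```python
from dataclasses import dataclass


@dataclass
class KalmanFilter1D:
    """Constant-velocity Kalman filter over the state [position, velocity]."""

    position: float = 0.0
    velocity: float = 0.0
    # Entries of the 2x2 state covariance matrix (large = very uncertain start).
    p00: float = 100.0
    p01: float = 0.0
    p10: float = 0.0
    p11: float = 100.0
    process_noise: float = 0.01      # assumed, added to the covariance diagonal
    measurement_noise: float = 1.0   # assumed variance of the detector's position

    def predict(self, dt: float = 1.0) -> float:
        """Advance the state by dt and return the predicted position."""
        self.position += dt * self.velocity
        # Covariance propagation: P = F P F^T + Q with F = [[1, dt], [0, 1]].
        p00 = self.p00 + dt * (self.p01 + self.p10) + dt * dt * self.p11 + self.process_noise
        p01 = self.p01 + dt * self.p11
        p10 = self.p10 + dt * self.p11
        p11 = self.p11 + self.process_noise
        self.p00, self.p01, self.p10, self.p11 = p00, p01, p10, p11
        return self.position

    def update(self, measured_position: float) -> None:
        """Correct the state with a new position measurement (H = [1, 0])."""
        residual = measured_position - self.position
        innovation_variance = self.p00 + self.measurement_noise
        k0 = self.p00 / innovation_variance  # gain applied to position
        k1 = self.p10 / innovation_variance  # gain applied to velocity
        self.position += k0 * residual
        self.velocity += k1 * residual
        # Covariance correction: P = (I - K H) P.
        p00 = (1.0 - k0) * self.p00
        p01 = (1.0 - k0) * self.p01
        p10 = self.p10 - k1 * self.p00
        p11 = self.p11 - k1 * self.p01
        self.p00, self.p01, self.p10, self.p11 = p00, p01, p10, p11


# An object moving 2 units per frame; after a few frames the filter has
# inferred the velocity and can predict the position in the next frame.
kf = KalmanFilter1D()
for frame in range(20):
    kf.predict()
    kf.update(2.0 * frame)
predicted_next = kf.predict()
```

In a tracker, the predicted position would be compared against the new detections, and the matched detection would then be passed to `update`.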

Object Re-Identification

Given the detections for the current frame, object re-identification is the process of matching them to detections from earlier frames.

A track consists of the detections for a particular object across frames. It records the object's historical location (and any other metadata, e.g. velocity, acceleration or ID) for each frame that has been processed.

Neural networks excel at this problem. They can be trained to take input images (usually crops produced by the object detector) and output vectors that represent the input object in a multi-dimensional space. A well-trained network produces vectors that cluster together for the same object and lie far apart for different objects.
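To make the "vectors that cluster together" idea concrete, here is a minimal sketch of matching a new detection's embedding against the stored embeddings of existing tracks using cosine similarity. The embeddings are hand-written toy vectors; in practice they would come from a trained re-identification network. The names `best_match`, `gallery` and the `min_similarity` threshold are assumptions made for this example.

```python
import math
from typing import Dict, Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def best_match(
    query: Sequence[float],
    gallery: Dict[int, Sequence[float]],
    min_similarity: float = 0.5,
) -> Optional[int]:
    """Return the track ID whose embedding is most similar to the query.

    Returns None if nothing reaches min_similarity, i.e. the detection
    probably belongs to an object that has not been seen before.
    """
    best_id: Optional[int] = None
    best_score = min_similarity
    for track_id, embedding in gallery.items():
        score = cosine_similarity(query, embedding)
        if score >= best_score:
            best_id, best_score = track_id, score
    return best_id


# Toy embeddings for two known tracks and one new detection.
gallery = {1: [1.0, 0.0, 0.0], 2: [0.0, 1.0, 0.0]}
matched_id = best_match([0.9, 0.1, 0.0], gallery)
```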

What to do with re-identified objects?

The re-identified object can either be:

- Assigned to an existing track: this can be based on a custom metric that utilises the predicted motion and re-identification values.

- Eliminated: this depends on the target use case and can occur when the tracked object moves outside the field of view. For example, a certain region of the video can be designated as an exit, and detections or tracks are terminated when the object enters that region. A track is also eliminated if no new detections occur within a certain threshold, e.g. after 10 s or 20 frames.

- Added to a new track: this occurs when a new object enters the field of view of the camera.
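The three outcomes (assign, eliminate, add) can be sketched as a small track manager. This is a simplified illustration, not a production tracker: it matches greedily on centre distance only, whereas the custom metric described in this section would also include predicted motion and re-identification scores. `SimpleTracker`, `max_distance` and `max_missed_frames` are hypothetical names and parameters.

```python
import math
from dataclasses import dataclass
from itertools import count
from typing import Dict, List, Tuple

Point = Tuple[float, float]


@dataclass
class Track:
    track_id: int
    position: Point
    missed_frames: int = 0


class SimpleTracker:
    def __init__(self, max_distance: float = 50.0, max_missed_frames: int = 20):
        self.max_distance = max_distance
        self.max_missed_frames = max_missed_frames
        self.tracks: Dict[int, Track] = {}
        self._ids = count(1)

    def update(self, detections: List[Point]) -> Dict[int, Point]:
        # 1. Assign: greedily pair tracks and detections, closest pairs first.
        pairs = sorted(
            (math.dist(track.position, det), track_id, det_index)
            for track_id, track in self.tracks.items()
            for det_index, det in enumerate(detections)
        )
        matched_tracks, matched_dets = set(), set()
        for distance, track_id, det_index in pairs:
            if distance > self.max_distance:
                break  # all remaining pairs are even further apart
            if track_id in matched_tracks or det_index in matched_dets:
                continue
            self.tracks[track_id].position = detections[det_index]
            self.tracks[track_id].missed_frames = 0
            matched_tracks.add(track_id)
            matched_dets.add(det_index)

        # 2. Eliminate: drop tracks that have gone unmatched for too long.
        for track_id in list(self.tracks):
            if track_id not in matched_tracks:
                self.tracks[track_id].missed_frames += 1
                if self.tracks[track_id].missed_frames > self.max_missed_frames:
                    del self.tracks[track_id]

        # 3. Add: every unmatched detection starts a new track.
        for det_index, det in enumerate(detections):
            if det_index not in matched_dets:
                new_id = next(self._ids)
                self.tracks[new_id] = Track(new_id, det)

        # Unmatched but still-alive tracks report their last known position.
        return {track_id: track.position for track_id, track in self.tracks.items()}
```

Calling `update` once per frame with the detected centres returns the current ID-to-position mapping. Greedy matching is used here only to keep the sketch short; an optimal assignment method such as the Hungarian algorithm is a common alternative.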

Using Deep Learning for Multiple Object Tracking

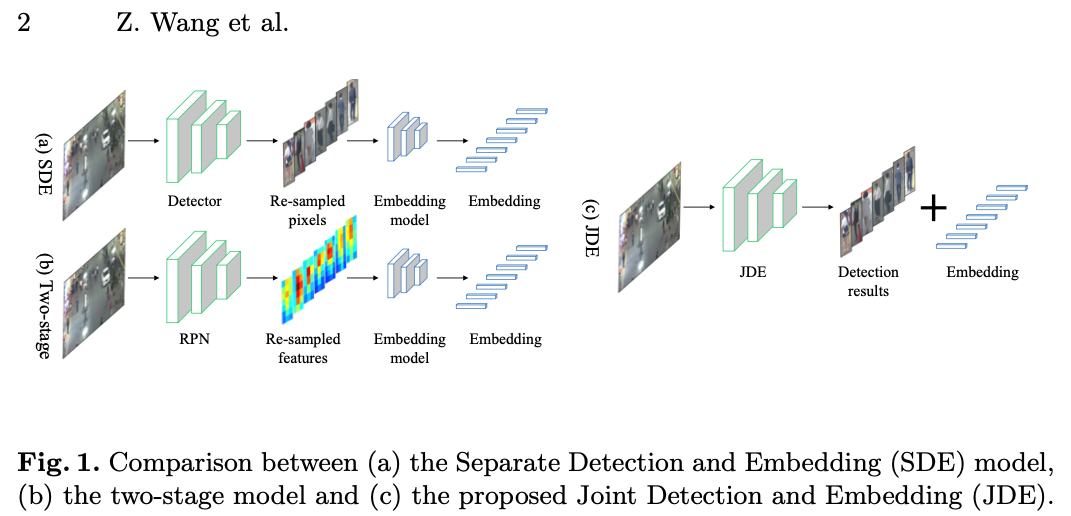

Z. Wang et al. (2020) highlight three main types of deep learning architectures for multiple object tracking:

- Separate Detection and Embedding (Re-identification) models (SDE)

- Two-stage models (detection features + embedding features)

- Joint detection and embedding (JDE) models

Real-time deep learning-based methods are computationally costly, with most SDE models running at ~6 FPS. Wang et al. (2020) present a technique for near real-time multiple object tracking at ~20 FPS.

Performance Metrics for Multiple Object Tracking

MOTA : Multiple Object Tracking Accuracy

The most used metric is MOTA (Multiple Object Tracking Accuracy), which combines the false positives, false negatives and identity mismatches into a single score. It is defined as

$$\text{MOTA} = 1 - \frac{\sum_t \left(FN_t + FP_t + IDSW_t\right)}{\sum_t GT_t}$$

where

- $t$ refers to the current frame/time.

- $FN_t$ is the number of false negatives. These are the targets that were marked as absent despite being present in the frame.

- $FP_t$ is the number of false positives. These are targets that were detected and tracked but did not actually exist in the frame.

- $IDSW_t$ is the number of ID switches. These are the misclassified targets, i.e. the tracker assigned a certain ID to a track and then changed the ID to that of another track.

- $GT_t$ is the number of ground truth objects in the frame.
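As a worked example, MOTA can be computed from per-frame counts as one minus the total number of errors (false negatives, false positives and ID switches) divided by the total number of ground truth objects. The function name `mota` and the tuple layout of the input are assumptions made for this sketch; benchmark toolkits also handle the matching between predictions and ground truth that produces these counts.

```python
from typing import Sequence, Tuple

# (false_negatives, false_positives, id_switches, ground_truth_objects) per frame
FrameCounts = Tuple[int, int, int, int]


def mota(frames: Sequence[FrameCounts]) -> float:
    """Multiple Object Tracking Accuracy over a sequence of frames."""
    total_errors = sum(fn + fp + idsw for fn, fp, idsw, _ in frames)
    total_ground_truth = sum(gt for _, _, _, gt in frames)
    if total_ground_truth == 0:
        raise ValueError("MOTA is undefined without ground truth objects")
    return 1.0 - total_errors / total_ground_truth


# Two frames with 10 ground truth objects each:
# frame 1 has one missed object, frame 2 has one false positive and one ID switch.
score = mota([(1, 0, 0, 10), (0, 1, 1, 10)])  # 1 - 3/20, approximately 0.85
```

Because the errors are summed before dividing, MOTA can become negative when the tracker makes more errors than there are ground truth objects.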

This metric has attracted some criticism, but it is still one of the most widely used metrics for multiple object tracking.

Multiple Object Tracking Datasets

MOTChallenge

A sample video from https://motchallenge.net/data/MOT20/

PETS 2009 Benchmark Data

Multiple camera crowd surveillance dataset

MOTS: Multi-Object Tracking and Segmentation

UA-DETRAC Tracking dataset

Tracking multiple vehicles

Airborne Object Tracking Dataset

Tracking flying objects. Dataset available at https://gitlab.aicrowd.com

Waymo Motion dataset

Motion tracking and prediction for autonomous vehicles

WildTrack

Dense pedestrian detection and tracking

Berkeley Deep Drive Dataset

AI City Challenge

Kitti Object Tracking

Joint Track Auto

Pose estimation and tracking

PathTrack

Generic Multiple Object Tracking, GMOT-40

TAO, Tracking Any Object

PoseTrack

Pose estimation and tracking

References

- PETS2009 Dataset

- B. Yang and R. Nevatia, “Online learned discriminative part-based appearance models for multi-human tracking,” in Proc. Eur. Conf. Comput. Vis., 2012, pp. 484–498.

- W. Luo, J. Xing, A. Milan, X. Zhang, W. Liu, X. Zhao and T.-K. Kim, “Multiple Object Tracking: A Literature Review,” 2017. arXiv:1409.7618.

- Z. Wang et al., “Towards Real-Time Multi-Object Tracking,” 2020. arXiv:1909.12605 [cs.CV].